The Alpha Centauri system consists of two stars and at least one planet. It takes light about 4 years to get there from our Sun. Distances are not drawn to scale (otherwise you wouldn’t see anything).

The last two decades have seen significant progress in our understanding of the universe. While previously we only knew the planets and moons in our own solar system, we are now aware of many planets orbiting distant stars. At last count, there are more than 800 of those extrasolar planets (see http://exoplanet.eu/). Even in the star system closest to our Sun, the Alpha Centauri system, an extrasolar planet candidate has been detected recently.

What would it take to visit another star? This question has been discussed seriously for several decades now, even before any extrasolar planet had been discovered. The point of this blog post will be to recall how hard interstellar travel is (answer: it is really, really hard).

The challenge is best illustrated by taking the speed of the fastest spacecraft flying today: the Voyager 1 space probe, moving away from our Sun at about 17 kilometers per second. At this speed, it would take Voyager more than 70,000 years to reach Alpha Centauri, if it were flying in that direction. It is safe to assume that no civilization is that patient (or that long-lived), waiting through several ice ages to hear back from a space probe launched tens of thousands of years ago. Thus, faster space probes are called for, preferably with a mission duration of only a few decades to reach the target star.
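As a quick check of these numbers, the following Python sketch computes coasting times at constant speed. It assumes a distance of 4.37 light years (the distance to Alpha Centauri A and B) and ignores any acceleration phase; `travel_time_years` is just an illustrative helper name.

```python
# Coasting-time sketch: assumed distance 4.37 ly, no acceleration phase.
C = 299_792_458.0                  # speed of light, m/s
LIGHT_YEAR = 9.4607304725808e15    # m
YEAR = 365.25 * 24 * 3600          # Julian year, s


def travel_time_years(speed_m_s: float, distance_ly: float = 4.37) -> float:
    """Years needed to cover distance_ly at a constant speed_m_s."""
    return distance_ly * LIGHT_YEAR / speed_m_s / YEAR


voyager = travel_time_years(17_000.0)  # Voyager 1, about 17 km/s
fast = travel_time_years(0.1 * C)      # 10 percent of the speed of light
print(f"Voyager 1: {voyager:,.0f} years")
print(f"0.1 c:     {fast:.0f} years")
```

With these assumptions Voyager 1 would need roughly 77,000 years, while at 10 percent of the speed of light the trip shrinks to about 44 years.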

Why travel to another star?

First, however, we should ask: why would one want to travel there? The short answer is that trying to explore another planet without going there is quite challenging.

Of course, as the detection of extrasolar planets shows, one can at least obtain some information about the planet’s orbit and its mass just by looking at the star. It is even possible to figure out a bit about the planet’s atmosphere, by doing spectroscopy on the small amount of starlight that is reflected from the planet. In other words, one tries to see the colors of the planet’s atmosphere. This has been achieved recently for a planet orbiting in a star system 130 light years from Earth (see http://www.eso.org/public/news/eso1002/). The spectrum that can be teased out with this method is quite rough, since it is extremely challenging to see the planet’s spectrum right next to the significantly brighter star. In any case, this is a promising way to learn more about planetary atmospheres. In the best possible scenario, changes in the atmosphere might then hint towards life processes taking place on the planet.

However, measuring the spectrum in this way only gives an overall view of the planet’s color. It does not reveal the shape of the planet’s surface (the clouds, oceans, continents etc.). In order to take a snapshot of a planet’s surface with a good resolution, one would need to build gigantic telescopes (or telescope arrays). We can illustrate this by taking Alpha Centauri as an example, which is our closest neighboring star system at only about 4 light years distance. If we wanted to resolve, say, 1 km on the surface of the planet, then we would need a telescope with a diameter comparable to the size of the Earth! At least, this is the result of a rough estimate based on the standard formula for the resolving power of a telescope.
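The rough estimate can be reproduced with the Rayleigh criterion, θ ≈ 1.22 λ / D. The sketch below assumes visible light of 500 nm wavelength and a distance of 4.37 light years; both values, and the helper name `aperture_needed`, are illustrative choices.

```python
# Rayleigh-criterion sketch: aperture needed to resolve a feature at a distance.
LIGHT_YEAR = 9.4607304725808e15  # m
EARTH_DIAMETER = 12_742e3        # mean diameter of the Earth, m


def aperture_needed(feature_m: float, distance_m: float,
                    wavelength_m: float = 500e-9) -> float:
    """Diameter D with 1.22 * wavelength / D equal to the feature's angular size."""
    theta = feature_m / distance_m       # small-angle approximation, rad
    return 1.22 * wavelength_m / theta


D = aperture_needed(1e3, 4.37 * LIGHT_YEAR)  # resolve 1 km at Alpha Centauri
print(f"aperture ~ {D / 1e3:,.0f} km = {D / EARTH_DIAMETER:.1f} Earth diameters")
```

The result, about 25,000 km, is roughly two Earth diameters, so a telescope about as large as the Earth is indeed the right order of magnitude.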

Therefore, it seems one would need to travel there even if only to take a look and send back some pictures to Earth. More ambitious projects would then involve sending a robotic probe down to the surface of the planet (just like the Curiosity rover currently exploring Mars), or even sending a team of astronauts. However, since we will see that interstellar travel is really hard, we will be content in our estimates with the most modest approach, i.e. an unmanned space probe whose goal is to take some close-up pictures. Probably that would mean a spacecraft of about a ton (1000 kg), since that is the size of probes like the Mars Global Surveyor or Voyager 1. Possibly that mass could be reduced, but even if the imaging and processing system weighed only a few tens of kilograms, one would still need a power source and a radio dish for communicating back to Earth.

The options

The Voyager spacecraft was carried up by a rocket and then used, in addition, the gravitational pull of the large planets Jupiter and Saturn to reach its present speed. However, as we have seen above, 17 km per second is just not fast enough. It is more than a thousand times too slow.

If you want to reach Alpha Centauri within about 40 years, you need to travel at 10 percent of the speed of light, since Alpha Centauri is about 4 light years away. Which concepts are out there that could provide acceleration to speeds of this kind?

Usual rocket fuel is not good enough. What could conceivably work are ideas based on nuclear propulsion. Project Orion, a study from the 1950s, proposed detonating nuclear bombs behind the rear end of a spacecraft. Each explosion would push against a plate and thereby give the craft a kick. In this way, a spacecraft of about 100,000 tons could reach Alpha Centauri in about 100 years. Later project studies (like Project Daedalus) envisaged nuclear fusion of small pellets in a reaction chamber. Again, the design called for a spacecraft on the order of 50,000 tons. Since the helium-3 required for the fusion pellets is very scarce on Earth, it would have to be mined from the atmosphere of Jupiter.

If you think these designs sound crazy, you are not alone. Launching such a massive spaceship filled to the brim with nuclear bombs from the surface of the Earth is probably not going to happen. And constructing these gigantic ships in space, even though safer, would probably require resources beyond what seems feasible.

All of these nuclear-powered designs are really large spaceships, because they have to carry along a large amount of fuel. The scientific payload would be only a very small fraction of the total mass.
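Why carrying fuel is so costly can be made quantitative with the Tsiolkovsky rocket equation, m_initial / m_final = exp(Δv / v_e), where v_e is the exhaust velocity. The sketch below uses the non-relativistic form (a fair approximation at 10 percent of light speed), ignores staging and any braking at the target, and plugs in two assumed, illustrative exhaust velocities that are not taken from the studies mentioned above.

```python
import math

C = 299_792_458.0  # m/s


def log10_mass_ratio(delta_v: float, exhaust_v: float) -> float:
    """log10 of the Tsiolkovsky mass ratio m_initial / m_final = exp(dv / ve).

    Returned as a base-10 exponent because the ratio itself overflows a float
    for chemical rockets.
    """
    return delta_v / exhaust_v / math.log(10)


dv = 0.1 * C
# Assumed, illustrative exhaust velocities (not taken from the text):
for name, ve in [("chemical, ~4.5 km/s", 4.5e3), ("fusion, ~10,000 km/s", 1.0e7)]:
    print(f"{name}: mass ratio ~ 10^{log10_mass_ratio(dv, ve):.1f}")
```

For chemical propulsion the mass ratio comes out near 10^2893, which is hopeless, whereas an exhaust velocity of 10,000 km/s gives a ratio of about 20. Even then, most of the launch mass is fuel.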

Carrying along the fuel is obviously a nuisance, since a lot of energy is used up for accelerating the fuel and not the payload. This can be avoided in schemes where the power is generated on the home planet and “beamed” to the spaceship. That is the concept behind light sails.

Light sails

One of the less obvious properties of light is that it can exert forces, so-called radiation forces. These forces are very feeble. For example, direct sunlight hitting a human body generates a push that is equivalent to the weight of a few grains of sand. That is why you will notice the heat and the brightness, but not the force. Nevertheless, the force can be made larger by increasing the surface area or by increasing the light intensity. And that is the concept behind light sails: unfold a large reflecting sail and wait for the radiation pressure force to accelerate it. Even though the accelerations are still modest, they are good enough if you can afford to be patient. A constant small acceleration acting continuously over hundreds of days can bring you to considerable speeds.
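The radiation force follows from the momentum carried by light: a beam of intensity I hitting an area A head-on delivers a force I·A/c if it is absorbed, and up to twice that if it is perfectly reflected. The Python sketch below checks the sand-grain comparison; the body area (0.7 m²), the sunlight intensity (1 kW/m²) and the 0.1 mg grain mass are rough assumptions.

```python
C = 299_792_458.0   # speed of light, m/s
G0 = 9.80665        # standard gravity, m/s^2


def radiation_force(intensity: float, area: float, reflectivity: float = 0.0) -> float:
    """Force (N) of light hitting a surface head-on.

    reflectivity = 0: all light absorbed, force I*A/c.
    reflectivity = 1: all light reflected, twice that.
    """
    return (1.0 + reflectivity) * intensity * area / C


body = radiation_force(1000.0, 0.7)   # assumed: 1 kW/m^2 on 0.7 m^2, absorbed
grain_weight = 0.1e-6 * G0            # assumed: 0.1 mg grain of sand
print(f"force on body: {body:.1e} N = {body / grain_weight:.1f} grains of sand")
```

This gives about 2e-6 N, the weight of two to three such grains.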

The first proposals for light sails in space seem to have originated from the space flight pioneers Konstantin Tsiolkovsky and Friedrich Zander during the 1920s. The radiation pressure force had been predicted theoretically in the 19th century by James Clerk Maxwell, starting from his equations of electromagnetism, although very early speculations in this direction date back even to Johannes Kepler around 1610. The force had been demonstrated experimentally around 1900 by Lebedev in Moscow and by Nichols and Hull at Dartmouth College in the U.S.

For voyages within the solar system, one could use the light emanating from the Sun. In that case, the craft would be termed a solar sail.

First demonstrations of solar sails

The first demonstrations of solar sails failed, but the failure was not related to the sails themselves. In 2005, the Cosmos 1 mission was launched by the Planetary Society, with additional funding from Cosmos Studios. It was launched onboard a converted intercontinental ballistic missile from a Russian submarine in the Barents Sea. Unfortunately, the rocket failed and the mission was lost. The same fate awaited NanoSail-D, which was launched by NASA in 2008 but again was lost due to rocket failure.

In 2010, the Japanese space agency JAXA demonstrated the first solar sail that also uses solar panels to power onboard systems. This successful project is named IKAROS. It demonstrated propulsion by the radiation pressure force, and after half a year it passed by Venus, taking some pictures. IKAROS is still sailing on. The square-shaped IKAROS sail measures 20 m along the diagonal, and it is made up of a very thin plastic membrane, about 10 times thinner than a human hair. The sail is stabilized by spinning: the centrifugal force pushes the sail outward from the center, so it does not crumple.

The overall radiation pressure force on IKAROS is still tiny: Only about a milliNewton, which (at a mass of 315 kg) translates into an acceleration that is more than a million times smaller than the gravitational acceleration “g” on Earth. Nevertheless, in 100 days, such a tiny acceleration would already propel the craft over a distance of about 100,000 km. It should be noted that the motion of IKAROS towards Venus was due to the initial velocity given to the craft, not the radiation pressure force (which, as this example demonstrates, would have been too small to go to Venus in half a year).
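The IKAROS numbers can be checked with constant-acceleration kinematics, d = a t² / 2. This sketch takes the force as exactly 1 mN and keeps it constant over 100 days, which is a simplification (the real force depends on the distance to the Sun and on the sail's orientation); the distance is the extra displacement caused by the sail, on top of the craft's orbital motion.

```python
G0 = 9.80665   # m/s^2
DAY = 86_400   # s

force = 1e-3   # N, "about a milliNewton", assumed constant
mass = 315.0   # kg
a = force / mass
t = 100 * DAY

# Extra displacement and velocity due to the sail alone, starting from zero
distance = 0.5 * a * t**2
speed = a * t

print(f"a = {a:.2e} m/s^2, i.e. g / {G0 / a:,.0f}")
print(f"after 100 days: {distance / 1e3:,.0f} km, {speed:.1f} m/s")
```

The acceleration comes out about three million times smaller than g, and the displacement after 100 days is roughly 120,000 km at a velocity gain of only about 27 m/s.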

Artist’s depiction of the Japanese IKAROS solar sail (Artist: Andrzej Mirecki, on Wikimedia Commons, from the IKAROS Wikipedia page)

NASA successfully flew a smaller mission, NanoSail-D2 at the end of 2010. The Planetary Society is currently building LightSail-1 as a more advanced successor to Cosmos 1.

Laser sails

For interstellar travel, however, sunlight quickly becomes too dim as the sail recedes from the Sun. In that case one needs to focus the light onto the sail, such that the radiation power received by the sail does not diminish as the sail moves away. This could be done either via gigantic mirrors focussing a beam of sunlight, or by a large array of lasers. Laser sails were analyzed in the 1980s by the physicist and science-fiction writer Robert L. Forward and subsequently by others.

What are the challenges faced by laser sails?

In brief: in order to get a sufficient acceleration, one wants a large beam power and a very thin, lightweight material. However, the beam will tend to heat the sail, and thus the material should be able to withstand high temperatures. In addition, the spacecraft will fly through the dust and gas of interstellar space, which tears holes into the sail and further heats the material.

In the following, we are going to go through the most important points, illustrating them with estimates.

The power

Since the radiation pressure force is so feeble, a lot of light power is needed. Of course, all of this depends on the mass that has to be accelerated. Suppose for the moment a very modest mass, of only 100 kg.

In addition, the power needed will depend on the acceleration we aim for.

How large is the acceleration we would need for a successful decades-long trip to Alpha Centauri? It turns out that the standard gravitational acceleration on Earth (1 g) would be more than enough: if a spacecraft is accelerated at 1 g for about 35 days, it will already have reached 10% of the speed of light. Since the whole mission takes a few decades, we can easily be more modest and require only, say, 10% of g. Then it would take about a year to reach 10% of the speed of light. That acceleration amounts to increasing the speed by roughly 1 meter per second every second.
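These durations follow from t = v / a. The sketch below ignores relativistic corrections, which are below one percent at 0.1 c; `days_to_reach` is an illustrative helper name.

```python
C = 299_792_458.0   # m/s
G0 = 9.80665        # m/s^2
DAY = 86_400.0      # s


def days_to_reach(v_target: float, accel: float) -> float:
    """Days of constant acceleration needed to reach v_target (t = v / a)."""
    return v_target / accel / DAY


for frac in (1.0, 0.1, 0.01):
    days = days_to_reach(0.1 * C, frac * G0)
    print(f"{frac:>5} g -> {days:7.0f} days ({days / 365.25:.1f} years)")
```

It gives about 35 days at 1 g, about 354 days at 0.1 g and roughly 9.7 years at 0.01 g.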

So here is the question: how much light power do you need to accelerate 100 kg at a rate of 1 meter per second every second?

The number turns out to be: 15 Giga Watt!

And that is assuming the optimal situation, where the light gets completely reflected, so as to provide the maximum force.

How large is 15 Giga Watt? This amounts to the total electric power consumption of a country like Sweden (see Wikipedia power consumption article).

Still, there is some leeway here: we can also do with an acceleration phase that lasts a decade, at one percent of g. Then the power is reduced to a tenth, i.e. 1.5 Giga Watt. This is roughly the power provided by a nuclear power plant, or by direct sunlight hitting an area of slightly more than a square kilometer (if all of that power could be used).
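The power figures follow from the radiation force on a mirror: reflecting a beam of power P gives a force F = 2P/c (more generally (1 + R) P / c for reflectivity R), so P = m a c / 2. The sketch below uses 1 m/s² and 0.1 m/s² as round stand-ins for 0.1 g and 0.01 g, as in the text; `beam_power` is an illustrative name.

```python
C = 299_792_458.0  # m/s


def beam_power(mass_kg: float, accel: float, reflectivity: float = 1.0) -> float:
    """Beam power (W) that gives mass_kg the acceleration accel.

    From F = (1 + R) * P / c, so P = m * a * c / (1 + R);
    R = 1 is a perfect mirror, R = 0 a perfect absorber.
    """
    return mass_kg * accel * C / (1.0 + reflectivity)


print(f"{beam_power(100.0, 1.0) / 1e9:.1f} GW")   # 100 kg at ~0.1 g
print(f"{beam_power(100.0, 0.1) / 1e9:.1f} GW")   # 100 kg at ~0.01 g
```

This reproduces the 15 GW and 1.5 GW quoted above; with a perfectly absorbing sail (R = 0) the required power would double.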

The area and the mass

How large would one want to make the sail? In principle, it could be quite small, if all that light power were focussed on a small area.

However, as we will see, the heating of the structure is a serious concern, and so it is better to dilute the light power over a larger area. As a reasonable approach, let’s assume that the light intensity (power per area) should be like that of direct sunlight hitting an area on earth. That is 1 kilo Watt per square meter. In that case, the 1.5 Giga Watt would have to be distributed over an area of somewhat more than a square kilometer. So the sail would be roughly a kilometer on each side.

The area is important, since it also determines the total mass of the sail. In order to figure out the mass, we also need to know the thickness and the density of the sail. The current solar sails mentioned above each have a thickness of a few micrometers (millionths of a meter), thinner than a human hair.

Even if we just assume 1 micrometer thickness, a square kilometer sail would already have a total mass of 1000 kg (at the density of water). This shows that our modest payload mass of 100 kg is no longer relevant. It is rather the sail mass itself that needs to be accelerated.
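The sail dimensions and mass follow from simple bookkeeping. The sketch assumes the 1.5 GW beam, an intensity of 1 kW/m², a thickness of 1 micrometer and the density of water as a stand-in for the actual sail material.

```python
import math

power = 1.5e9        # W, beam power from the 1%-of-g estimate
intensity = 1.0e3    # W/m^2, about direct sunlight
thickness = 1e-6     # m
density = 1000.0     # kg/m^3, water as a stand-in for the sail material

area = power / intensity              # m^2
side = math.sqrt(area)                # side of a square sail, m
sail_mass = area * thickness * density

print(f"area {area / 1e6:.1f} km^2, side {side / 1e3:.2f} km, mass {sail_mass:,.0f} kg")
```

The sail comes out at 1.5 km² (about 1.2 km on a side) and 1500 kg, fifteen times the 100 kg payload.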

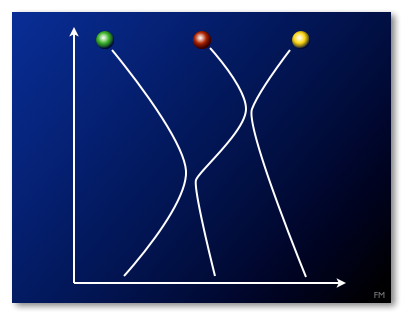

Once the sail mass is larger than the payload mass, the more relevant question is what acceleration a given light intensity produces (e.g. 1 kW per square meter, as assumed above). If the light intensity is fixed, the acceleration becomes independent of the total sail area: doubling the area doubles the force but also the mass.
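The area independence can be seen directly: the force per square meter is (1 + R) I / c, while the mass per square meter is ρ h (density times thickness), so the area drops out. This sketch evaluates the resulting acceleration for a perfectly reflecting 1 micrometer sail at water density and 1 kW/m²; these are the illustrative assumptions used above, not the parameters of a specific proposal.

```python
C = 299_792_458.0  # m/s
G0 = 9.80665       # m/s^2


def sail_acceleration(intensity: float, thickness: float, density: float,
                      reflectivity: float = 1.0) -> float:
    """Acceleration of a bare sail (payload neglected), in m/s^2.

    Force per area (1 + R) * I / c divided by mass per area density * thickness;
    the sail area cancels out.
    """
    return (1.0 + reflectivity) * intensity / (C * density * thickness)


a = sail_acceleration(1.0e3, 1e-6, 1000.0)
print(f"a = {a * 1e3:.1f} mm/s^2 = {100 * a / G0:.3f} % of g")
```

The result is only about 7 mm/s², or roughly 0.07 percent of g. Since the acceleration grows linearly with intensity, percent-level accelerations with such a sail require a much higher intensity or a lighter sail, which is where the heating problem discussed below comes in.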

Typical proposals for laser-sail missions to reach 10 % of the speed of light assume sail areas of a few square kilometers, total masses on the order of a ton (sail and payload), total power in the range of Giga Watt, and accelerations on the order of a few percent of g.

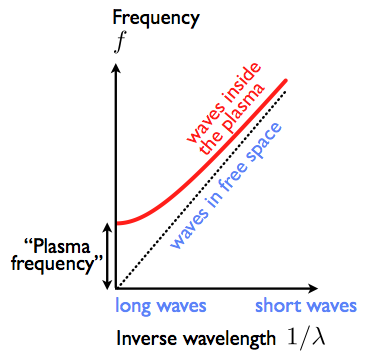

Focussing the beam

The light beam, originating from our own solar system, has to be focussed onto the sail of a few km diameter, across a distance measuring light years. Basic laws of wave optics dictate that any light beam, even that produced by a laser, will spread as it propagates (diffraction). To keep this spread as small as possible, the beam has to be focussed by a large lens or produced by a large array of lasers. Estimates show that one would need a lens measuring thousands of kilometers to focus the beam over a distance of a light year! Thus, any such system would have to fly in space, which again makes power production more difficult.

The requirements on the size of the lens can be relaxed a bit by having the acceleration operate only during a smaller fraction of the trip. However, even if the beam is switched on only during the first 0.1 light years of travel (as opposed to the full 4 light years), the lens would still need to be on the order of a thousand kilometers across.
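The lens sizes follow from diffraction: an aperture of diameter D produces a spot roughly 2.44 λ L / D across at distance L (the diameter of the Airy disk), and this spot should not be larger than the sail. The sketch assumes a 3 km sail and a laser wavelength of 1 micrometer; the microwave case with a 1 cm wavelength is added for comparison. All three parameter sets are illustrative assumptions.

```python
LIGHT_YEAR = 9.4607304725808e15  # m


def lens_diameter(distance_m: float, sail_diameter_m: float, wavelength_m: float) -> float:
    """Aperture whose diffraction spot (Airy disk, ~2.44 * wavelength * L / D
    across) just fits onto the sail at distance L."""
    return 2.44 * wavelength_m * distance_m / sail_diameter_m


SAIL = 3e3  # m, assumed sail diameter ("a few km")
cases = [("1 ly, 1 um laser", 1.0, 1e-6),
         ("0.1 ly, 1 um laser", 0.1, 1e-6),
         ("0.1 ly, 1 cm microwaves", 0.1, 1e-2)]
for label, dist_ly, wavelength in cases:
    D = lens_diameter(dist_ly * LIGHT_YEAR, SAIL, wavelength)
    print(f"{label}: lens ~ {D / 1e3:,.0f} km")
```

It gives roughly 7,700 km for a beam distance of 1 light year and about 770 km for 0.1 light years. With 1 cm microwaves, the same geometry would call for a lens millions of kilometers across.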

The heat

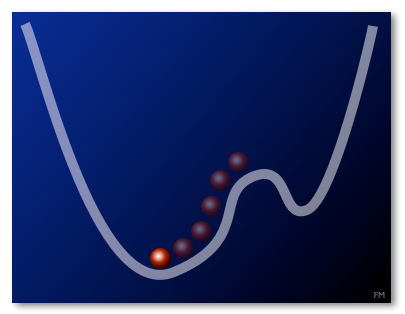

Suppose that power generation were no problem. Suppose you could have cheap access to a power source equivalent to the power consumption of a country like Germany (60 GW) or the US (400 GW). What would be the limit to the acceleration you can achieve? It turns out that powers of this magnitude would not even be needed, since at some point it is no longer the total power that is the limiting factor.

The problem is that once you fix the material density and the thickness, you can increase the acceleration only by increasing the intensity, i.e. the light power impinging on a square meter. However, at least a small fraction of that power will be absorbed, and it will heat up the sail. The problem is known to anyone who has left their car in direct sunlight, which makes the metal surface very hot. In space, an equilibrium would be established between the power being absorbed and the power being re-radiated from the sail as thermal radiation. Typical materials considered for light sails, like aluminum, have melting points of several hundred to a thousand degrees Celsius (aluminum itself melts at about 660 °C).
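The equilibrium can be written as α I = 2 ε σ T⁴: the absorbed fraction α of the beam intensity I balances thermal emission from both faces of a thin sheet with emissivity ε (σ is the Stefan-Boltzmann constant). The sketch assumes a flat sail, equal emissivity on both faces and no heat conduction; the absorptance of 10 %, the emissivity of 0.05 and the 600 K temperature limit are hypothetical values chosen only for illustration.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def equilibrium_temperature(intensity: float, absorptance: float, emissivity: float) -> float:
    """Temperature (K) at which absorbed power absorptance * I equals the
    thermal emission 2 * emissivity * sigma * T^4 from both faces."""
    return (absorptance * intensity / (2.0 * emissivity * SIGMA)) ** 0.25


def max_intensity(t_max: float, absorptance: float, emissivity: float) -> float:
    """Largest beam intensity (W/m^2) that keeps the sail at or below t_max."""
    return 2.0 * emissivity * SIGMA * t_max**4 / absorptance


# Hypothetical coating: absorbs 10 % of the beam, thermal emissivity 0.05
print(f"{equilibrium_temperature(1e3, 0.10, 0.05):.0f} K at 1 kW/m^2")
print(f"{max_intensity(600.0, 0.10, 0.05) / 1e3:.1f} kW/m^2 keeps T <= 600 K")
```

With these numbers the sail sits at about 360 K under 1 kW/m², and the intensity must stay below roughly 7 kW/m² to keep it under 600 K. A lower absorptance or a higher emissivity raises that limit.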

Ideally, the material would reflect most of the light it receives from the beam, absorbing very little. The little heat it does absorb should be re-radiated very efficiently at other wavelengths. Tailoring the optical properties of a sail in this way is possible in principle. However, it usually also means the thickness of the sail has to be increased, e.g. to incorporate different layers of material with a thickness matching the wavelength of light (leading to sails of some micrometers thickness).

In some of the current proposals of laser sails for interstellar travel, it is this heating effect that limits the admissible light intensity and therefore the acceleration.

Several different materials are being considered, among them dielectrics (rather thick but very good reflectivity, little absorption) and metals like aluminum. In addition, one may replace optical light beams by microwave beams, which can be generated more efficiently and be reflected by a thin mesh. The downside of using microwaves is that their wavelength is ten thousand times larger, so the size of the lens is correspondingly larger as well.

The dust

As the sail flies through space, it will encounter gas atoms and dust particles. Admittedly, matter in interstellar space is spread out very thinly (that is why there is almost no friction to begin with!). Nevertheless, the Interstellar Medium is not completely devoid of matter. Somewhat fortunately for light sails, our Sun (together with its nearest neighboring stars) sits inside a low-density region, the so-called “Local Bubble”. In the few light years around our Sun (in the Local Interstellar Cloud), the density of hydrogen atoms is about 1 atom per ten cubic centimeters, vastly smaller than the density of air. That is more than a thousand times fewer atoms per cubic centimeter than in the best man-made vacuum. In addition, there are grains of dust, with sizes on the order of a micrometer.

It is quite simple to calculate how many atoms will hit the surface of the sail: Just take a single atom of the sail’s surface. As the sail flies through space, this atom will encounter a few of the hydrogen atoms of the interstellar medium. How many? That depends on the length of the trip (a few light years) and the density of hydrogen atoms. All told, for a typical atomic radius of 1 Angstrom (0.1 nanometer), our surface atom will encounter about 100 hydrogen atoms. That means roughly: If all of the atoms were to stick to the surface, they would pile up 100 layers thick, which would be on the order of 10 nanometers.
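The count is the number of hydrogen atoms inside the thin cylinder swept out by one surface atom: N = n σ L, with density n, cross-section σ = π r² and path length L. The sketch assumes a density of 0.1 atoms per cubic centimeter, an atomic radius of 1 Angstrom, a path of 4.37 light years, and (as in the text) about 1 Angstrom of thickness per deposited layer.

```python
import math

LIGHT_YEAR = 9.4607304725808e15  # m

n_h = 1e5                  # hydrogen atoms per m^3 (1 per 10 cm^3)
path = 4.37 * LIGHT_YEAR   # m, assumed trip length
radius = 1e-10             # m, atomic radius of 1 Angstrom
cross_section = math.pi * radius**2

# Interstellar atoms inside the cylinder swept out by one surface atom
hits = n_h * cross_section * path
layer = hits * radius      # pile-up, assuming ~1 Angstrom per layer as in the text
print(f"~{hits:.0f} hydrogen atoms per surface atom, ~{layer * 1e9:.0f} nm if they all stick")
```

This gives about 130 atoms, i.e. a layer on the order of 10 nanometers, consistent with the estimate above.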

That in itself does not sound dramatic. The real severity of the problem only becomes apparent when one takes into account the speed at which the hydrogen atoms and other particles bombard the sail: that is 10 percent of the speed of light, since the sail is zipping through space at that speed! Being hit by a shower of projectiles traveling at 10 percent of the speed of light does not bode well for the integrity of the sail.
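To put this speed in perspective, the sketch below computes the kinetic energy of a single hydrogen atom at 10 percent of the speed of light, both relativistically and with the Newtonian formula. The rest energy of about 938.8 MeV is taken as proton plus electron, neglecting the tiny binding energy.

```python
import math

REST_ENERGY_MEV = 938.272 + 0.511  # proton + electron rest energy, MeV
beta = 0.1                         # v / c

gamma = 1.0 / math.sqrt(1.0 - beta**2)
e_rel = (gamma - 1.0) * REST_ENERGY_MEV        # relativistic kinetic energy
e_newton = 0.5 * REST_ENERGY_MEV * beta**2     # Newtonian m v^2 / 2
print(f"kinetic energy: {e_rel:.2f} MeV (Newtonian: {e_newton:.2f} MeV)")
```

Each atom arrives with about 4.7 MeV, comparable to an alpha particle from radioactive decay, and the relativistic correction is below one percent.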

It turns out that the speed itself may actually help. This is because an atom zipping by at 10% of the speed of light has only very little time to interact with the atoms in the sail. For two atoms colliding at this speed, it is better not to view an atom as a solid, albeit fuzzy, object of about 1 Angstrom radius. Rather, each atom consists of a point-like nucleus and a few point-like electrons. When two such atoms zip through each other, it is very unlikely that any of those particles (electrons and nuclei) come very close to each other. They will exert Coulomb forces (on the order of a nanoNewton), but since they are flying by so fast, the forces do not have a lot of time to transfer energy. In this regard, faster atoms really do less damage. Nevertheless, there is some energy transfer, and the biggest part of it is due to incoming interstellar atoms kicking the electrons inside the sail. In a quick-and-dirty estimate (based on some data for proton bombardment of silicon targets), the typical numbers here are tens or hundreds of keV of energy transferred to a sail of 1 micrometer thickness during one passage of an interstellar atom. This produces heating (and ionization, and some X-ray radiation).

Powerful computer simulations are nowadays being used to study such processes in detail (see a 2012 Lawrence Livermore Lab study on energetic protons traveling through aluminum).

You can find a general (non-technical) discussion of this crucial problem for laser sails on the “Centauri Dreams Blog”. The overall conclusion there seems to be optimistic, but the question does not yet seem to be completely settled.

The problem could be reduced somewhat by limiting the acceleration to a shorter period, after which the sail is no longer needed. However, the acceleration then needs to be higher, with a correspondingly larger intensity and more severe heating. In any case, the scientific payload needs to be protected all the way, even if the sail could be jettisoned early.

The flyby

Once the space probe has reached the target system, things have to go very fast. At 10 % of the speed of light, the probe would cover the distance between the Earth and the Sun in a mere 80 minutes, and the distance between Earth and Moon in only about 13 seconds (!). That means there is precious little time to take the pictures and do all the measurements for which one has been waiting several decades. Presumably the probe would first snap pictures and then later take its time to radio back the results to Earth, where they would arrive about 4 years later.
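The crossing times are just distance divided by speed. The sketch uses the astronomical unit for the Earth-Sun distance and a mean Earth-Moon distance of 384,400 km.

```python
C = 299_792_458.0        # m/s
AU = 1.495978707e11      # Earth-Sun distance (astronomical unit), m
EARTH_MOON = 3.844e8     # mean Earth-Moon distance, m

v = 0.1 * C
sun_minutes = AU / v / 60
moon_seconds = EARTH_MOON / v
print(f"1 AU at 0.1 c:       {sun_minutes:.0f} minutes")
print(f"Earth-Moon at 0.1 c: {moon_seconds:.1f} seconds")
```

At 0.1 c the probe needs about 83 minutes for one astronomical unit and about 13 seconds for the Earth-Moon distance, ten times what light itself needs (about 1.3 seconds).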

While the probe is flying through the target star system, it is also in much greater danger of running into dust grains and gas atoms, and the scientific instruments need to be protected against that. If the probe were to hit the planet, that could be catastrophic, since even a 1000 kg probe traveling at 10% of the speed of light would release an energy of roughly 100 megatons of TNT, comparable to ten large hydrogen bombs.
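The impact energy is the probe's kinetic energy, (γ - 1) m c². The sketch converts it to megatons of TNT using the conventional 4.184 × 10¹⁵ J per megaton; the 1000 kg mass and the 0.1 c speed are those assumed above.

```python
import math

C = 299_792_458.0        # m/s
MEGATON_TNT = 4.184e15   # J per megaton of TNT (conventional value)


def kinetic_energy(mass_kg: float, beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) * m * c^2, in joules."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return (gamma - 1.0) * mass_kg * C**2


energy = kinetic_energy(1000.0, 0.1)
print(f"{energy:.2e} J = {energy / MEGATON_TNT:.0f} megatons of TNT")
```

The result is about 4.5 × 10¹⁷ J, i.e. roughly 110 megatons of TNT.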

Robert Forward proposed an ingenious way to actually slow down the probe: this involves two sails, one of which is jettisoned and afterwards serves as a freely floating reflector that sends the light beam back onto the side of the other sail that faces away from the Earth. This approach, however, requires much larger sails and even more resources.

Conclusion

Interstellar travel is really, really hard if you are not very patient. However, with laser sails, it is no longer a purely fantastic, outlandish idea. In addition, concrete steps towards testing the concepts are being taken right now, with modest solar sails deployed and planned by the Japanese space agency JAXA, by NASA, and by the Planetary Society.

Further information

- A presentation on the Planetary Society’s LightSail-1 design.

- The Centauri Dreams Blog, commenting in a serious way on original research papers relevant for interstellar travel.

- The Icarus Interstellar website, a foundation dedicated to achieving interstellar travel within the next 100 years. See their “Project Forward”, which analyzes the potential of laser sails.

- The 100 Year Starship Initiative, seed-funded by DARPA, to make human interstellar travel possible.

- A July 2012 opinion piece “Alone in the Void” in the New York Times, by the astrophysicist Adam Frank, claiming that human interstellar travel will most likely not happen during the next thousands of years, though without any serious discussion of any interstellar travel concept. And a reply by Paul Gilster on Centauri Dreams, with many comments criticizing the somewhat superficial opinion piece.

- The report of a 1999 study performed by Geoffrey Landis, with many of the earlier references.

- Forward, R. L. (1984): “Roundtrip Interstellar Travel Using Laser-Pushed Lightsails”, Journal of Spacecraft and Rockets, Vol. 21, pp. 187-195. The original pioneering paper.